Stochastic Average Gradient Method for training of linear models.

#include <shark/Algorithms/Trainers/LinearSAGTrainer.h>

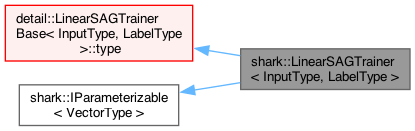

Inheritance diagram for shark::LinearSAGTrainer< InputType, LabelType >:

Public Types

| typedef Base::ModelType | ModelType |

| typedef Base::WeightedDatasetType | WeightedDatasetType |

| typedef detail::LinearSAGTrainerBase< InputType, LabelType >::LossType | LossType |

Public Types inherited from shark::AbstractWeightedTrainer< Model, LabelTypeT >

| typedef base_type::ModelType | ModelType |

| typedef base_type::InputType | InputType |

| typedef base_type::LabelType | LabelType |

| typedef base_type::DatasetType | DatasetType |

| typedef WeightedLabeledData< InputType, LabelType > | WeightedDatasetType |

Public Types inherited from shark::AbstractTrainer< Model, LabelTypeT >

| typedef Model | ModelType |

| typedef ModelType::InputType | InputType |

| typedef LabelTypeT | LabelType |

| typedef LabeledData< InputType, LabelType > | DatasetType |

Public Types inherited from shark::IParameterizable< VectorType >

| typedef VectorType | ParameterVectorType |

Public Member Functions

| LinearSAGTrainer (LossType const *loss, double lambda=0, bool offset=true) | |

| Constructor. | |

| std::string | name () const |

| From INameable: return the class name. | |

| void | train (ModelType &model, WeightedDatasetType const &dataset) |

| Executes the algorithm and trains a model on the given weighted data. | |

| std::size_t | epochs () const |

| Return the number of training epochs. A value of 0 indicates that the default of max(10, dimensionOfData) should be used. | |

| void | setEpochs (std::size_t value) |

| Set the number of training epochs. A value of 0 indicates that the default of max(10, dimensionOfData) should be used. | |

| double | lambda () const |

| Return the value of the regularization parameter lambda. | |

| void | setLambda (double lambda) |

| Set the value of the regularization parameter lambda. | |

| bool | trainOffset () const |

| Check whether the model to be trained should include an offset term. | |

| void | setTrainOffset (bool offset) |

| Sets whether the model to be trained should include an offset term. | |

| RealVector | parameterVector () const |

| Returns the vector of hyper-parameters (same as lambda). | |

| void | setParameterVector (RealVector const &newParameters) |

| Sets the vector of hyper-parameters (same as lambda). | |

| size_t | numberOfParameters () const |

| Returns the number of hyper-parameters. | |

| virtual void | train (ModelType &model, WeightedDatasetType const &dataset)=0 |

| Executes the algorithm and trains a model on the given weighted data. | |

| virtual void | train (ModelType &model, DatasetType const &dataset) |

| Executes the algorithm and trains a model on the given unweighted data. | |

Public Member Functions inherited from shark::INameable

| virtual | ~INameable () |

Public Member Functions inherited from shark::ISerializable

| virtual | ~ISerializable () |

| Virtual d'tor. | |

| virtual void | read (InArchive &archive) |

| Read the component from the supplied archive. | |

| virtual void | write (OutArchive &archive) const |

| Write the component to the supplied archive. | |

| void | load (InArchive &archive, unsigned int version) |

| Versioned loading of components, calls read(...). | |

| void | save (OutArchive &archive, unsigned int version) const |

| Versioned storing of components, calls write(...). | |

| BOOST_SERIALIZATION_SPLIT_MEMBER () | |

Public Member Functions inherited from shark::IParameterizable< VectorType >

| virtual | ~IParameterizable () |

Detailed Description

Stochastic Average Gradient Method for training of linear models.

Given a differentiable loss function L(y, f(x)) and a linear model with outputs f_j(x) = w_j^T x + b_j, this trainer solves the regularized risk minimization problem

\[ \min \frac{1}{2} \sum_j \frac{\lambda}{2}\|w_j\|^2 + \frac 1 {\ell} \sum_i L(y_i, f(x_i)), \]

where i runs over training data, j over the model outputs, and lambda > 0 is the regularization parameter.

The algorithm averages stored gradients to obtain a good estimate of the full gradient. The average is formed from the most recent gradient value computed for each data point. Early on this estimate is poor because the stored gradient values are outdated, but it becomes accurate as the iterates converge. This yields linear convergence on strongly convex functions and O(1/T) convergence on convex functions that are not strongly convex.
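The update described above can be sketched in standalone C++. This is a minimal illustration, not the Shark implementation: the function name `sagLeastSquares`, the squared loss L(y, f) = 0.5 (f - y)^2, the single-output model without offset, the regularizer taken as (lambda/2)||w||^2, the fixed seed, and the step size 1/(16 L) from the analysis in the referenced paper are all assumptions made for the example.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical helper, not part of Shark: SAG for a single-output linear
// model f(x) = w^T x (no offset) with squared loss L(y, f) = 0.5 * (f - y)^2
// and regularizer (lambda / 2) * ||w||^2 (both are assumptions of this sketch).
std::vector<double> sagLeastSquares(
    std::vector<std::vector<double>> const& inputs,
    std::vector<double> const& labels,
    double lambda,
    std::size_t epochs,
    unsigned seed = 42)
{
    std::size_t const n = inputs.size();
    assert(n > 0 && labels.size() == n);
    std::size_t const dim = inputs[0].size();

    // Lipschitz constant of the per-example gradient: max_i ||x_i||^2 + lambda.
    double maxNormSqr = 0.0;
    for (auto const& x : inputs) {
        assert(x.size() == dim);
        double normSqr = 0.0;
        for (double v : x) normSqr += v * v;
        if (normSqr > maxNormSqr) maxNormSqr = normSqr;
    }
    double const lipschitz = maxNormSqr + lambda;
    assert(lipschitz > 0.0);
    double const eta = 1.0 / (16.0 * lipschitz); // assumed constant step size
    double const invN = 1.0 / static_cast<double>(n);

    std::vector<double> w(dim, 0.0);
    std::vector<double> lastDerivative(n, 0.0); // last dL/df seen per data point
    std::vector<double> gradientSum(dim, 0.0);  // sum_i lastDerivative[i] * x_i

    std::mt19937 rng(seed);
    std::uniform_int_distribution<std::size_t> pick(0, n - 1);

    for (std::size_t t = 0; t < epochs * n; ++t) {
        std::size_t const i = pick(rng);
        auto const& x = inputs[i];

        // Evaluate the model and the loss derivative at the sampled point.
        double f = 0.0;
        for (std::size_t k = 0; k < dim; ++k) f += w[k] * x[k];
        double const derivative = f - labels[i];

        // Swap the stored gradient of point i for the fresh one in the sum.
        double const change = derivative - lastDerivative[i];
        lastDerivative[i] = derivative;
        for (std::size_t k = 0; k < dim; ++k) gradientSum[k] += change * x[k];

        // Step along the averaged gradient plus the regularization gradient.
        for (std::size_t k = 0; k < dim; ++k)
            w[k] -= eta * (gradientSum[k] * invN + lambda * w[k]);
    }
    return w;
}
```

Storing only the scalar loss derivative per data point suffices here because, for a linear model, each per-example gradient is that scalar times the input vector.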

The algorithm supports classification and regression, dense and sparse inputs, and weighted and unweighted datasets.

Reference: Schmidt, Mark, Nicolas Le Roux, and Francis Bach. "Minimizing finite sums with the stochastic average gradient." arXiv preprint arXiv:1309.2388 (2013).

Definition at line 91 of file LinearSAGTrainer.h.

Member Typedef Documentation

◆ LossType

| typedef detail::LinearSAGTrainerBase<InputType,LabelType>::LossType shark::LinearSAGTrainer< InputType, LabelType >::LossType |

Definition at line 98 of file LinearSAGTrainer.h.

◆ ModelType

| typedef Base::ModelType shark::LinearSAGTrainer< InputType, LabelType >::ModelType |

Definition at line 96 of file LinearSAGTrainer.h.

◆ WeightedDatasetType

| typedef Base::WeightedDatasetType shark::LinearSAGTrainer< InputType, LabelType >::WeightedDatasetType |

Definition at line 97 of file LinearSAGTrainer.h.

Constructor & Destructor Documentation

◆ LinearSAGTrainer()

| LinearSAGTrainer (LossType const *loss, double lambda=0, bool offset=true) | inline |

Constructor.

Parameters

| loss | (sub-)differentiable loss function |

| lambda | regularization parameter for two-norm regularization, 0 by default |

| offset | whether to train with an offset/bias parameter, true by default |

Definition at line 106 of file LinearSAGTrainer.h.

Member Function Documentation

◆ epochs()

| std::size_t epochs () const | inline |

Return the number of training epochs. A value of 0 indicates that the default of max(10, dimensionOfData) should be used.

Definition at line 125 of file LinearSAGTrainer.h.
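The meaning of the value 0 can be made concrete with a small sketch. The helper `effectiveEpochs` is hypothetical and not part of Shark; it only restates the documented rule that 0 selects the default of max(10, dimensionOfData), while any other value is used as given.

```cpp
#include <cstddef>

// Hypothetical helper, not Shark API: resolve the number of epochs that
// would be run for a given epochs() setting and input dimension.
constexpr std::size_t effectiveEpochs(std::size_t requested,
                                      std::size_t dimensionOfData)
{
    return requested != 0
        ? requested                                       // explicit setting wins
        : (dimensionOfData > 10 ? dimensionOfData : 10);  // max(10, dimension)
}

// With the default setting, low-dimensional data still gets 10 epochs.
static_assert(effectiveEpochs(0, 3) == 10, "default for small dimension");
static_assert(effectiveEpochs(0, 50) == 50, "default for large dimension");
static_assert(effectiveEpochs(7, 50) == 7, "explicit value is kept");
```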

◆ lambda()

| double lambda () const | inline |

Return the value of the regularization parameter lambda.

Definition at line 135 of file LinearSAGTrainer.h.

Referenced by shark::LinearSAGTrainer< InputType, LabelType >::setLambda().

◆ name()

| std::string name () const | inline virtual |

From INameable: return the class name.

Reimplemented from shark::INameable.

Definition at line 114 of file LinearSAGTrainer.h.

◆ numberOfParameters()

| size_t numberOfParameters () const | inline virtual |

Returns the number of hyper-parameters.

Reimplemented from shark::IParameterizable< VectorType >.

Definition at line 164 of file LinearSAGTrainer.h.

◆ parameterVector()

| RealVector parameterVector () const | inline virtual |

Returns the vector of hyper-parameters (same as lambda).

Reimplemented from shark::IParameterizable< VectorType >.

Definition at line 151 of file LinearSAGTrainer.h.

◆ setEpochs()

| void setEpochs (std::size_t value) | inline |

Set the number of training epochs. A value of 0 indicates that the default of max(10, dimensionOfData) should be used.

Definition at line 130 of file LinearSAGTrainer.h.

Referenced by run().

◆ setLambda()

| void setLambda (double lambda) | inline |

Set the value of the regularization parameter lambda.

Definition at line 139 of file LinearSAGTrainer.h.

References shark::LinearSAGTrainer< InputType, LabelType >::lambda().

◆ setParameterVector()

| void setParameterVector (RealVector const &newParameters) | inline virtual |

Sets the vector of hyper-parameters (same as lambda).

Reimplemented from shark::IParameterizable< VectorType >.

Definition at line 157 of file LinearSAGTrainer.h.

References SIZE_CHECK.

◆ setTrainOffset()

| void setTrainOffset (bool offset) | inline |

Sets whether the model to be trained should include an offset term.

Definition at line 147 of file LinearSAGTrainer.h.

◆ train() [1/3]

| virtual void train (ModelType &model, DatasetType const &dataset) | inline virtual |

Executes the algorithm and trains a model on the given unweighted data.

This method behaves like calling train with a weighted dataset in which all weights are equal. The default implementation creates such a dataset and runs the weighted version of the algorithm.

Reimplemented from shark::AbstractWeightedTrainer< Model, LabelTypeT >.

Definition at line 80 of file AbstractWeightedTrainer.h.
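The equivalence between the unweighted and the equally weighted case can be illustrated on the empirical loss term alone. The helpers below are hypothetical and not Shark API, and normalizing by the sum of the weights is an assumption of this sketch rather than a statement about how Shark treats weights.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper, not Shark API: weighted average of per-example losses,
// normalized by the total weight (an assumption of this sketch).
double weightedMeanLoss(std::vector<double> const& losses,
                        std::vector<double> const& weights)
{
    assert(!losses.empty() && losses.size() == weights.size());
    double weightedSum = 0.0;
    double weightSum = 0.0;
    for (std::size_t i = 0; i < losses.size(); ++i) {
        weightedSum += weights[i] * losses[i];
        weightSum += weights[i];
    }
    assert(weightSum > 0.0);
    return weightedSum / weightSum;
}

// The unweighted version builds equal weights and delegates to the weighted
// one, mirroring the default behaviour described above.
double meanLoss(std::vector<double> const& losses)
{
    std::vector<double> const equalWeights(losses.size(), 1.0);
    return weightedMeanLoss(losses, equalWeights);
}
```

Under this normalization any constant weight gives the same result, so the specific value 1.0 is arbitrary.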

◆ train() [2/3]

| void train (ModelType &model, WeightedDatasetType const &dataset) | inline virtual |

Executes the algorithm and trains a model on the given weighted data.

Implements shark::AbstractWeightedTrainer< Model, LabelTypeT >.

Definition at line 118 of file LinearSAGTrainer.h.

References shark::random::globalRng.

Referenced by run().

◆ train() [3/3]

| virtual void train (ModelType &model, WeightedDatasetType const &dataset)=0 | virtual |

Executes the algorithm and trains a model on the given weighted data.

Implements shark::AbstractWeightedTrainer< Model, LabelTypeT >.

◆ trainOffset()

| bool trainOffset () const | inline |

Check whether the model to be trained should include an offset term.

Definition at line 143 of file LinearSAGTrainer.h.

The documentation for this class was generated from the following file:

- include/shark/Algorithms/Trainers/LinearSAGTrainer.h